Less than 15% of Twitch user reports last year led to enforcement actions

Streaming site's transparency report shows only 2% of hateful conduct and harassment reports were acted upon

Twitch is committing to regularly releasing data on its community moderation and safety efforts, beginning with yesterday's release of the streaming service's first global transparency report.

"Creating a Transparency Report is an important measure of our accountability -- it requires being honest about the obstacles we face and how we are working to resolve them to improve safety on Twitch," the company said in an accompanying blog post. "Moving forward, we'll be releasing two transparency reports a year so we can track our progress as a community."

The report laid out a number of processes, policies, and features Twitch has implemented on this front, such as the Automod tool that streamers can set up to pre-screen user chat and automatically block certain phrases or words. Throughout 2020, human moderation was a more popular option than Automod, but most channels -- 93% in the first half of 2020 and 96% in the second half -- had one or both forms of moderation.

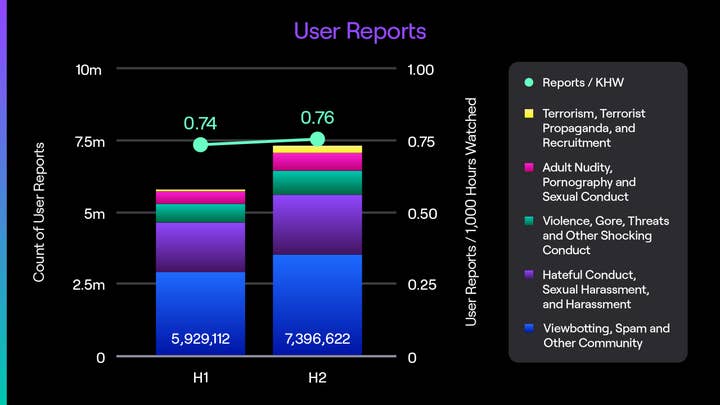

Twitch's report also addressed user reports of violations, noting that there were 13.3 million reports across the service in 2020, which works out to about 0.75 reports for every 1,000 hours of content watched on Twitch.

However, those reports don't always lead to action.

Twitch reported 1.99 million enforcement actions taken in 2020, ranging from warnings to temporary suspensions to indefinite suspensions. That means less than 15% of all reports led to enforcement, but these numbers don't necessarily account for multiple reports made about the same incident, or for enforcements that came about from Twitch's "machine detection of harmful content" rather than a user report.

One thing that seems clear is that not all types of report are equally likely to lead to enforcement. Twitch gave exact figures for the number of enforcement actions taken in each category, but did not give the exact number of reports in each. Instead, it included a bar graph with a breakdown of the categories that gives enough information for some rough math.

(GamesIndustry.biz requested the exact numbers but a Twitch representative told us, "We're not able to share a breakdown of the absolute number of reports by type, but the graph should give you a sense of the proportion of each.")

The most frequently reported category of offense -- and far and away the most likely to lead to consequences -- was "Viewbotting, Spam, and Other Community" violations. There were roughly 6.6 million reports in the category last year and 1.72 million enforcements, so as much as 26% of reports were acted upon.

The next most common category of user report was for "Hateful Conduct, Sexual Harassment, and Harassment." Twitch's graph suggests it received roughly 3.9 million reports in that category over the course of 2020, but just 80,767 -- about 2% -- received so much as a warning.

"Violence, Gore, Threats, and Other Shocking Conduct" saw roughly 1.5 million reports lodged over the year, but with just 11,254 enforcement actions, its 0.75% enforcement rate was the lowest of the categories we looked at apart from terrorism.

Our best guess based on the graph is that "Adult Nudity, Pornography, and Sexual Conduct" generated about 1.1 million reports, with 27,394 actions taken making for an enforcement rate around 2.5%.
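For readers who want to check the rough math, the rates above can be reproduced with a few lines of Python. This is only a sketch: the report counts are our approximate readings of Twitch's bar graph rather than figures Twitch supplied, the viewbotting enforcement count is rounded, and the names `CATEGORIES`, `enforcement_rate`, and `category_rates` are our own labels for illustration.

```python
# (reports, enforcement actions) for each category discussed above.
# Report counts are rough estimates read from Twitch's bar graph, NOT exact
# figures; enforcement counts are Twitch's published numbers (1.72 million
# is itself rounded).
CATEGORIES = {
    "Viewbotting, Spam, and Other Community": (6_600_000, 1_720_000),
    "Hateful Conduct, Sexual Harassment, and Harassment": (3_900_000, 80_767),
    "Violence, Gore, Threats, and Other Shocking Conduct": (1_500_000, 11_254),
    "Adult Nudity, Pornography, and Sexual Conduct": (1_100_000, 27_394),
}


def enforcement_rate(reports, enforcements):
    """Return enforcement actions as a percentage of reports."""
    return 100.0 * enforcements / reports


def category_rates(categories=CATEGORIES):
    """Map each category name to its approximate enforcement rate in percent."""
    return {
        name: enforcement_rate(reports, enforcements)
        for name, (reports, enforcements) in categories.items()
    }


# Service-wide figure: 1.99 million enforcement actions against 13.3 million reports.
OVERALL_RATE = enforcement_rate(13_300_000, 1_990_000)

if __name__ == "__main__":
    for name, rate in category_rates().items():
        print(f"{name}: {rate:.2f}%")
    print(f"All reports: {OVERALL_RATE:.2f}%")
```

Because the denominators are eyeballed from a graph, the results are best treated as ballpark figures, and the same caveats about duplicate reports and machine-detected violations apply.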

The "Terrorism, Terrorist Propaganda, and Recruitment" category had so few reports that we aren't comfortable calculating even a ballpark enforcement percentage, but the number of reports appeared to be significantly under 500,000.

Twitch said it had 87 enforcement actions in the category over the course of the year, 77 of them for showing terrorist propaganda and the remaining 10 for "glorifying or advocating acts of terrorism, extreme violence or large-scale property destruction."

Twitch failing to act on reports of sexual harassment and misconduct on the platform and in the workplace was a recurring theme of a report we ran last year based on interviews and personal accounts from those who worked or appeared on the platform.